Can a robot learn right from wrong? Attempts to imbue robots, self-driving cars and military machines with a sense of ethics reveal just how hard this is

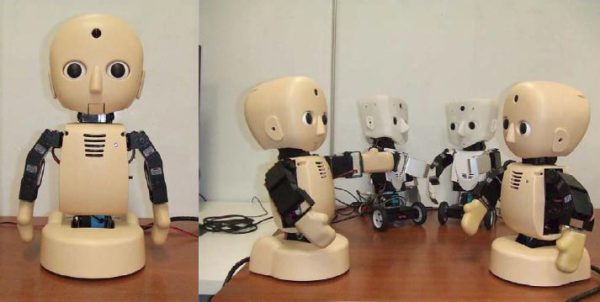

Can we teach a robot to be good? Fascinated by the idea, roboticist Alan Winfield of Bristol Robotics Laboratory in the UK built an ethical trap for a robot, and was stunned by the machine’s response.

In an experiment, Winfield and his colleagues programmed a robot to prevent other automatons, acting as proxies for humans, from falling into a hole. This is a simplified version of Isaac Asimov’s fictional First Law of Robotics: a robot must not allow a human being to come to harm.

At first, the robot was successful in its task. As a human proxy moved towards the hole, the robot rushed in to push it out of the path of danger. But when the team added a second human proxy rolling towards the hole at the same time, the robot was forced to choose. Sometimes it managed to save one human while letting the other perish; a few times it even managed to save both.

But in 14 out of 33 trials, the robot wasted so much time fretting over its decision that both humans fell into the hole. The work was presented on 2 September at the Towards Autonomous Robotic Systems meeting in Birmingham, UK.

Winfield describes his robot as an “ethical zombie” that has no choice but to behave as it does. Although it may save others according to a programmed code of conduct, it doesn’t understand the reasoning behind its actions. Winfield admits he once thought it was not possible for a robot to make ethical choices for itself. Today, he says, “my answer is: I have no idea”.

As robots integrate further into our everyday lives, this question will need to be answered. A self-driving car, for example, may one day have to weigh the safety of its passengers against the risk of harming other motorists or pedestrians. It may be very difficult to program robots with rules for such encounters.

But robots designed for military combat may offer the beginning of a solution. Ronald Arkin, a computer scientist at Georgia Institute of Technology in Atlanta, has built a set of algorithms for military robots, dubbed an “ethical governor”, which is meant to help them make smart decisions on the battlefield.

He has already tested it in simulated combat, showing that drones with such programming can choose not to shoot, or try to minimize casualties, during a battle near an area protected from combat under the rules of war, such as a school or hospital.

Arkin says that designing military robots to act more ethically may be low-hanging fruit, as these rules are well known. “The laws of war have been thought about for thousands of years and are encoded in treaties.” Unlike human fighters, who can be swayed by emotion and break these rules, machines would not.

“When we’re talking about ethics, all of this is largely about robots that are developed to function in pretty prescribed spaces,” says Wendell Wallach, author of Moral Machines: Teaching robots right from wrong.

Still, he says, experiments like Winfield’s hold promise in laying the foundations on which more complex ethical behavior can be built. “If we can get them to function well in environments when we don’t know exactly all the circumstances they’ll encounter, that’s going to open up vast new applications for their use.”

Saturday 27 July 2024